Same as the example that sends video to a peer.

-- js/main.js

(start)

const stream = await navigator.mediaDevices.getUserMedia({audio: true, video: true});

// audio and video are requested with no further options

localVideo.srcObject = stream;

localStream = stream;

(call)

// create localPeer and remotePeer and assign their callback functions

// in ontrack: remoteVideo.srcObject = e.streams[0];

localStream.getTracks().forEach(track => pc1.addTrack(track, localStream));

const offer = await pc1.createOffer(offerOptions);

// offer and answer are written directly into the other peer

(hangup)

pc1.close();

pc2.close();

pc1 = null;

pc2 = null; // is setting these to null really necessary? (just to be safe)

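The offer and answer are exchanged between tabs of the same browser using the Broadcast Channel API. Run the page in two tabs at the same time and press Start in each. Oddly, both tabs seem able to capture video at once, and I cannot tell which tab's audio I am hearing.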

-- js/main.js async

Communication channel

const signaling = new BroadcastChannel('webrtc'); // any name will do

(event handler)

signaling.onmessage

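if (!localStream) return; // not ready (receive-only operation is deliberately not allowed)

switch (e.data.type)

offer, // handle a received offer

answer, // handle a received answer

candidate, // handle a received candidate

ready, -- if (pc) return; makeCall();

// only if this tab has no peer connection of its own yet

bye, default // handle as you see fit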
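The dispatch in signaling.onmessage can be sketched as a plain function so the branching is easier to follow. This is only a sketch, not the sample's code: `dispatchSignal`, the `state` argument (standing in for the globals `localStream` and `pc`), the `handlers` object and the returned labels are all names assumed here.

```javascript
// Hypothetical helper: routes one signaling message to the matching handler.
// `state` stands in for the globals used in the notes (localStream, pc).
function dispatchSignal(data, state, handlers) {
  if (!state.localStream) return 'ignored'; // not ready: receive-only is not allowed
  switch (data.type) {
    case 'offer':
      handlers.handleOffer(data);
      return 'offer';
    case 'answer':
      handlers.handleAnswer(data);
      return 'answer';
    case 'candidate':
      handlers.handleCandidate(data);
      return 'candidate';
    case 'ready':
      // Another tab announced itself; call it only if we have no peer yet.
      if (state.pc) return 'ignored';
      handlers.makeCall();
      return 'ready';
    case 'bye':
      if (state.pc) handlers.hangup();
      return 'bye';
    default:
      return 'unhandled';
  }
}
```

In the browser this would presumably be wired up as `signaling.onmessage = e => dispatchSignal(e.data, {localStream, pc}, handlers);`.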

(start button)

getUserMedia(); signaling.postMessage({type: 'ready'});

(makeCall())

(createPeerConnection)

pc = new RTCPeerConnection();

pc.onicecandidate = e => {

const message = {type: 'candidate', candidate: null};

if (e.candidate) {

message.candidate = e.candidate.candidate;

message.sdpMid = e.candidate.sdpMid;

message.sdpMLineIndex = e.candidate.sdpMLineIndex;

// to check (sdpMid and sdpMLineIndex may be unnecessary)

}

signaling.postMessage(message);

};

pc.ontrack = e => remoteVideo.srcObject = e.streams[0];

localStream.getTracks().forEach(track => pc.addTrack(track, localStream));

// both sides send media
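The candidate handling on both ends can be isolated into two small functions. A minimal sketch under assumptions: `candidateToMessage` and `messageToCandidateInit` are hypothetical names, and plain objects stand in for RTCIceCandidate. As far as I know, the browser rejects a non-empty candidate when both sdpMid and sdpMLineIndex are null, so dropping both is probably not an option.

```javascript
// Hypothetical helper: flatten an RTCIceCandidate (or null at the end of
// gathering) into a plain object that can be passed to postMessage().
function candidateToMessage(candidate) {
  const message = {type: 'candidate', candidate: null};
  if (candidate) {
    message.candidate = candidate.candidate;
    message.sdpMid = candidate.sdpMid;
    message.sdpMLineIndex = candidate.sdpMLineIndex;
  }
  return message;
}

// Hypothetical counterpart on the receiving side: rebuild the argument for
// pc.addIceCandidate(). A missing candidate string means "no more candidates".
function messageToCandidateInit(message) {
  if (!message.candidate) return null;
  return {
    candidate: message.candidate,
    sdpMid: message.sdpMid,
    sdpMLineIndex: message.sdpMLineIndex,
  };
}
```

Usage would look like `pc.onicecandidate = e => signaling.postMessage(candidateToMessage(e.candidate));` on the sending side.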

const offer = await pc.createOffer();

signaling.postMessage({type: 'offer', sdp: offer.sdp}); // send the offer

await pc.setLocalDescription(offer); // set it as localDescription

(handleOffer)

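createPeerConnection(); // the result is not assigned to pc here (bug?); probably fine if pc is the global set inside createPeerConnection()

await pc.setRemoteDescription(offer);

const answer = await pc.createAnswer();

signaling.postMessage({type: 'answer', sdp: answer.sdp});

await pc.setLocalDescription(answer);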

(handleAnswer)

pc.setRemoteDescription(answer);

(handleCandidate)

pc.addIceCandidate(candidate); // or pass null when no candidate is present (or is "" the correct end-of-candidates marker?)

Looks complicated. Used when both sides may send an offer. The onnegotiationneeded event fires.
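The core of handling "both sides may send an offer" is deciding what to do when two offers cross. The sketch below shows the collision rule as it is usually described for this pattern (one peer is "polite" and yields, the other ignores the incoming offer). It is not taken from the sample's main.js; the function name and the shape of the `state` argument are assumptions.

```javascript
// Decide whether an incoming description must be ignored.
// polite:         true for the peer that yields when offers collide
// makingOffer:    true while this peer is creating and sending its own offer
// signalingState: pc.signalingState at the time the message arrives
function shouldIgnoreOffer(description, {polite, makingOffer, signalingState}) {
  const offerCollision =
    description.type === 'offer' &&
    (makingOffer || signalingState !== 'stable');
  return !polite && offerCollision;
}
```

The polite peer never ignores an offer; it accepts the remote offer instead of its own, so exactly one of the two crossing offers survives.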

-- js/main.js (import peer.js)

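Messages are sent with target.postMessage(), where target is the iframe.

Messages are received via window.onmessage =

Changes the audio encoding and displays the bitrate and other statistics.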

-- index.html

select "opus", "ISAC", "G722", "PCMU", "red" // opus is the best choice

// graphs are drawn by ../../../js/third_party/graph.js

-- js/main.js

const codecPreferences = document.querySelector('#codecPreferences');

const supportsSetCodecPreferences = window.RTCRtpTransceiver &&

'setCodecPreferences' in window.RTCRtpTransceiver.prototype;

if (supportsSetCodecPreferences) {

codecSelector.style.display = 'none';

const {codecs} = RTCRtpSender.getCapabilities('audio');

codecs.forEach(codec => {

if (['audio/CN', 'audio/telephone-event'].includes(codec.mimeType)) {

return;

}

const option = document.createElement('option');

option.value = (codec.mimeType + ' ' + codec.clockRate + ' ' +

(codec.sdpFmtpLine || '')).trim();

option.innerText = option.value;

codecPreferences.appendChild(option);

});

}

// negotiation is done by direct assignment between the two peers
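The way the option strings are built can be tried outside the browser. A minimal sketch: the capability list below is hand-written stand-in data (not real output of RTCRtpSender.getCapabilities('audio')), and the helper names are assumptions.

```javascript
// Entries that are not selectable audio codecs and should not be offered.
const SKIPPED_MIME_TYPES = ['audio/CN', 'audio/telephone-event'];

// Build the option label/value for one codec capability entry.
function codecToOptionValue(codec) {
  return (codec.mimeType + ' ' + codec.clockRate + ' ' +
      (codec.sdpFmtpLine || '')).trim();
}

// Turn a capabilities list into the option strings shown in the <select>.
function codecOptionValues(codecs) {
  return codecs
    .filter(codec => !SKIPPED_MIME_TYPES.includes(codec.mimeType))
    .map(codecToOptionValue);
}

// Hand-written stand-in for RTCRtpSender.getCapabilities('audio').codecs.
const sampleCodecs = [
  {mimeType: 'audio/opus', clockRate: 48000, channels: 2,
    sdpFmtpLine: 'minptime=10;useinbandfec=1'},
  {mimeType: 'audio/PCMU', clockRate: 8000, channels: 1},
  {mimeType: 'audio/telephone-event', clockRate: 8000, channels: 1},
];

console.log(codecOptionValues(sampleCodecs));
```

The trim() matters for codecs without an sdpFmtpLine, which would otherwise end in a trailing space.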

(call())

new RTCPeerConnection()

getUserMedia()

gotStream() -- addTrack()

if (supportsSetCodecPreferences)

// direct SDP rewriting; setPtime() may come in handy

// get statistics

const sender = pc1.getSenders()[0];

if (!sender) {

return;

}

sender.getStats().then(res => {

res.forEach(report => {
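The bitrate shown in the graph has to be derived from two successive snapshots of the same report. A minimal sketch, assuming the 'outbound-rtp' report fields `bytesSent` and `timestamp` (milliseconds); the function name is an assumption.

```javascript
// Compute the send bitrate in kbit/s from two snapshots of the same
// 'outbound-rtp' report. timestamp is in milliseconds, so
// bits divided by milliseconds equals kilobits per second.
function bitrateKbps(previous, current) {
  const elapsedMs = current.timestamp - previous.timestamp;
  if (elapsedMs <= 0) return 0; // avoid dividing by zero on duplicate snapshots
  return 8 * (current.bytesSent - previous.bytesSent) / elapsedMs;
}
```

For example, 4000 bytes sent during one second gives 8 * 4000 / 1000 = 32 kbit/s.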

Change the bitrate while the call is running (video only).

-- index.html

select 75, 125, 250, ... unlimited (kbps)

-- js/main.js

navigator.mediaDevices.getUserMedia({video: true})

bandwidthSelector.onchange = // UI event

// browser check

// 'setParameters' in RTCRtpSender.prototype

const sender = pc1.getSenders()[0];

const parameters = sender.getParameters();

if (bandwidth === 'unlimited') delete parameters.encodings[0].maxBitrate;

else parameters.encodings[0].maxBitrate = bandwidth * 1000; // kbps to bps

sender.setParameters(parameters)
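The parameter edit itself can be separated from the browser calls. A minimal sketch, assuming `bandwidth` is the selector value (a kbps number or the string 'unlimited'); `applyMaxBitrate` is a hypothetical name, and the empty-encodings guard is an assumption about browsers that return no encodings.

```javascript
// Apply the selector value to a parameters object obtained from
// sender.getParameters(). `bandwidth` is in kbps or the string 'unlimited'.
function applyMaxBitrate(parameters, bandwidth) {
  if (!parameters.encodings || parameters.encodings.length === 0) {
    // Assumption: some browsers return no encodings; whether setParameters()
    // then accepts this object is browser dependent.
    parameters.encodings = [{}];
  }
  if (bandwidth === 'unlimited') {
    delete parameters.encodings[0].maxBitrate;
  } else {
    parameters.encodings[0].maxBitrate = Number(bandwidth) * 1000; // kbps -> bps
  }
  return parameters;
}
```

In the page this would be used as `sender.setParameters(applyMaxBitrate(sender.getParameters(), bandwidthSelector.value))`.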

// fallback if setParameters() is not available

offer = pc1.createOffer()

pc1.setLocalDescription()

rewrite pc1.remoteDescription.sdp

pc1.setRemoteDescription(desc containing the rewritten sdp)
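The SDP rewrite in this fallback amounts to inserting or replacing a bandwidth line. A minimal sketch, assuming the `b=AS:<kbps>` form placed right after the first `c=` line; the helper names are assumptions, and the Firefox variant (which I believe uses `b=TIAS` in bits per second) is not handled here.

```javascript
// Insert or replace a bandwidth line (b=AS:<kbps>) in an SDP string.
// A new line is placed directly after the first c= line.
function setBandwidthLine(sdp, kbps) {
  const line = 'b=AS:' + kbps + '\r\n';
  if (sdp.includes('b=AS:')) {
    return sdp.replace(/b=AS:.*\r\n/, line);
  }
  return sdp.replace(/c=IN (.*)\r\n/, 'c=IN $1\r\n' + line);
}

// Remove the bandwidth line again (the 'unlimited' case).
function removeBandwidthLine(sdp) {
  return sdp.replace(/b=AS:.*\r\n/, '');
}
```

The rewritten string would then go into `pc1.setRemoteDescription({type: pc1.remoteDescription.type, sdp: rewrittenSdp})`.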

Targets video. Firefox offers only the default and reports "codec is undefined" at main.js 216. Chrome shows many choices after Start. The SDP parameters are printed to the console.

-- js/main.js

// unsupported in Firefox

const supportsSetCodecPreferences = window.RTCRtpTransceiver &&

'setCodecPreferences' in window.RTCRtpTransceiver.prototype;

// list the codecs

if (supportsSetCodecPreferences) {

const {codecs} = RTCRtpSender.getCapabilities('video');

codecs.forEach(codec => {

if (['video/red', 'video/ulpfec', 'video/rtx'].includes(codec.mimeType)) {

return;

}

... continues up to appendChild(option)

// where the error occurs: reading codecId

const stats = await pc1.getStats();

stats.forEach(stat => {

if (!(stat.type === 'outbound-rtp' && stat.kind === 'video')) {

return;

}

const codec = stats.get(stat.codecId);

// codec = stats.codec?
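The "codec is undefined" error suggests that the lookup by codecId finds nothing in some browsers. A defensive version of the lookup might look like the sketch below; a plain Map stands in for the stats report (which is map-like), and `findOutboundVideoCodec` is a hypothetical name.

```javascript
// Find the codec report used by the outbound video stream.
// `stats` is anything Map-like, as the result of pc.getStats() is.
function findOutboundVideoCodec(stats) {
  let result = null;
  stats.forEach(stat => {
    if (!(stat.type === 'outbound-rtp' && stat.kind === 'video')) return;
    // codecId can be missing; in that case there is nothing to look up.
    const codec = stat.codecId ? stats.get(stat.codecId) : undefined;
    if (codec) result = codec;
  });
  return result; // null when no codec report is available
}
```

The caller then checks for null before reading `codec.mimeType`, instead of failing on an undefined value.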

Turn video on in the middle of a call (possibly important).

-- js/main.js async

(start)

navigator.mediaDevices.getUserMedia({audio: true, video: false })

gotStream() -- localVideo.srcObject = stream;

(call) -- the usual processing

(upgrade) -- turn video on

navigator.mediaDevices.getUserMedia({video: true})

.then(stream => {

const videoTracks = stream.getVideoTracks();

localStream.addTrack(videoTracks[0]);

localVideo.srcObject = null;

localVideo.srcObject = localStream;

pc1.addTrack(videoTracks[0], localStream);

// renegotiation

offer = pc1.createOffer()

pc1.setLocalDescription(offer)

pc2.setRemoteDescription(pc1.localDescription) // would passing offer directly also work?

answer = pc2.createAnswer();

pc2.setLocalDescription(answer);

pc1.setRemoteDescription(pc2.localDescription) // or answer?
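The renegotiation round can be written as one async function. A minimal sketch that passes the created offer and answer objects directly instead of reading localDescription; I assume this is equivalent for a same-page demo where ICE candidates are exchanged separately, but that is not verified against the sample. `renegotiate` is a hypothetical name.

```javascript
// Run one offer/answer round between two connections in the same page.
// pc1 and pc2 only need the four RTCPeerConnection methods used below.
async function renegotiate(pc1, pc2) {
  const offer = await pc1.createOffer();
  await pc1.setLocalDescription(offer);
  await pc2.setRemoteDescription(offer);
  const answer = await pc2.createAnswer();
  await pc2.setLocalDescription(answer);
  await pc1.setRemoteDescription(answer);
}
```

It would be called right after `pc1.addTrack(videoTracks[0], localStream)`; the same function also covers the initial call.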

Streams flow from pc1Local to pc1Remote and from pc2Local to pc2Remote: two one-to-one pairs (not a mesh).

-- js/main.js

pc1Local -- addTrack(), createOffer(),

setLocalDescription, pc1Remote.setRemoteDesc

pc1Remote -- createAnswer()

ontrack = gotRemoteStream1 -- video1.srcObject = e.streams[0]

pc2Local -- addTrack(), createOffer(),

setLocalDesc, pc2Remote.setRemoteDesc

pc2Remote -- createAnswer()

ontrack = gotRemoteStream2 -- video2.srcObject = e.streams[0] // each connection has its own streams[0]

This is all there is to it:

const pipes = [];

pipes.push(new VideoPipe(localStream, gotremoteStream));

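Displays the offer and answer SDP and lets you edit them (for in-depth study).

Offer and answer are the usual types; pranswer (provisional answer) exists to improve the user experience. Firefox may not support it, but since the error is raised by adapter, it might work without adapter.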

offer = pc1.createOffer()

pc1.setLocalDescription(offer)

pc2.setRemoteDescription(offer);

answer = pc2.createAnswer()

// rewrite the answer (desc)

desc.sdp = desc.sdp.replace(/a=recvonly/g, 'a=inactive');

desc.type = 'pranswer';

pc2.setLocalDescription(desc)

pc1.setRemoteDescription(desc)

(accept) // final answer

answer = pc2.createAnswer()

// rewrite the answer (desc) back to its original form

desc.sdp = desc.sdp.replace(/a=inactive/g, 'a=recvonly');

desc.type = 'answer';

pc2.setLocalDescription(desc)

pc1.setRemoteDescription(desc)
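The two rewrites are mirror images of each other and can be kept as a pair of pure functions. A minimal sketch operating on plain `{type, sdp}` objects; the function names are assumptions, and new objects are returned rather than mutating `desc` in case the description object is read-only.

```javascript
// Turn a real answer into a provisional one: nothing is received yet.
function toProvisionalAnswer(desc) {
  return {
    type: 'pranswer',
    sdp: desc.sdp.replace(/a=recvonly/g, 'a=inactive'),
  };
}

// Turn the description back into the final answer on accept.
function toFinalAnswer(desc) {
  return {
    type: 'answer',
    sdp: desc.sdp.replace(/a=inactive/g, 'a=recvonly'),
  };
}
```

Note that toFinalAnswer() turns every `a=inactive` into `a=recvonly`, including sections that were inactive before the first rewrite, so it is only an exact inverse when the original answer contained no `a=inactive` lines.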

Video frame rate and size as specified through getUserMedia. Set them before pressing Get media. Firefox may not accept small frameRate values. Using the sender's maxBitrate looks like the better approach.
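Building the constraints object for a given size and frame rate can be sketched as follows. The function name and the `exact` switch are assumptions made for illustration; `width`, `height`, `frameRate`, `ideal` and `exact` are the standard constraint keys.

```javascript
// Build a getUserMedia() constraints object for a target size and frame rate.
// Values are requested as `ideal` by default; pass exact = true to require
// them, in which case getUserMedia() may reject if the camera cannot comply.
function buildVideoConstraints({width, height, frameRate}, exact = false) {
  const wrap = value => (exact ? {exact: value} : {ideal: value});
  const video = {};
  if (width !== undefined) video.width = wrap(width);
  if (height !== undefined) video.height = wrap(height);
  if (frameRate !== undefined) video.frameRate = wrap(frameRate);
  return {audio: false, video};
}
```

Usage would be `navigator.mediaDevices.getUserMedia(buildVideoConstraints({width: 640, height: 360, frameRate: 15}))`. Using `ideal` avoids the hard failure that a too-small exact frameRate might cause.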

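-- example

const sender = pc.addTrack(track, stream);

const encoding = { maxBitrate: 60000, maxFramerate: 5, scaleResolutionDownBy: 2 };

// setParameters() must be given the object returned by getParameters()

const parameters = sender.getParameters();

Object.assign(parameters.encodings[0], encoding);

sender.setParameters(parameters);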

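Uses RTCPeerConnection.getStats().

Only the display is more detailed.

Does not work in Firefox ("requestVideoAnimationCallback is not supported in your browser."). Detailed statistics. Apparently requestVideoFrameCallback() can reduce the delay.

Shows the SDP in detail. offer.sdp is plain text, so it can be displayed as is.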

outputTextarea.value = offer.sdp;
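Because the SDP is plain text, it can also be taken apart with ordinary string functions. A minimal sketch that lists the media sections; `listMediaSections` is a hypothetical name and the parsing covers only the `m=` line itself.

```javascript
// Split an SDP string into lines and summarise every m= section.
function listMediaSections(sdp) {
  return sdp
    .split(/\r?\n/)
    .filter(line => line.startsWith('m='))
    .map(line => {
      // m=<media> <port> <proto> <fmt> ...
      const [media, port, proto, ...formats] = line.slice(2).split(' ');
      return {media, port: Number(port), proto, formats};
    });
}
```

Calling `listMediaSections(offer.sdp)` gives one entry per audio/video/application section, with the payload type numbers in `formats`.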

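Dial tones (DTMF). Is the purpose to place calls over a telephone line? No sound comes out in Firefox. No alert appears either; cause unknown.

(ontrack) gotRemoteStream()

pc1.getSenders();

find audioSender

dtmfSender = audioSender.dtmf -- alert if it is missing

dtmfSender.ontonechange = dtmfOnToneChange; // the event fires on the DTMF sender

(dtmfOnToneChange)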

function sendTones(tones) {

if (dtmfSender && dtmfSender.canInsertDTMF) {

const duration = durationInput.value;

const gap = gapInput.value;

dtmfSender.insertDTMF(tones, duration, gap);

}

}
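Since duration and gap come straight from text fields, it may help to validate them before calling insertDTMF(). A minimal sketch: the limits used (duration 40 to 6000 ms, gap at least 30 ms, defaults 100 and 70) are what I understand the specification to say, and `normalizeDtmfArgs` is a hypothetical name.

```javascript
// Characters accepted by insertDTMF(): digits, A-D, '#', '*' and ','
// (the comma inserts a pause).
const DTMF_PATTERN = /^[0-9A-Da-d#*,]*$/;

// Convert the raw text-field values into safe insertDTMF() arguments.
// Assumed limits: duration 40..6000 ms, gap at least 30 ms.
// Empty, zero or non-numeric input falls back to the defaults 100 and 70.
function normalizeDtmfArgs(tones, durationText, gapText) {
  if (!DTMF_PATTERN.test(tones)) {
    throw new RangeError('unsupported DTMF character in "' + tones + '"');
  }
  const clamp = (value, min, max) => Math.min(max, Math.max(min, value));
  const duration = clamp(Number(durationText) || 100, 40, 6000);
  const gap = Math.max(30, Number(gapText) || 70);
  return {tones: tones.toUpperCase(), duration, gap};
}
```

sendTones() could then do `const {tones: t, duration, gap} = normalizeDtmfArgs(tones, durationInput.value, gapInput.value);` before `dtmfSender.insertDTMF(t, duration, gap)`.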

Just displays RTCPeerConnection properties: signalingState, connectionState, iceConnectionState. Might peerIdentity also be useful?

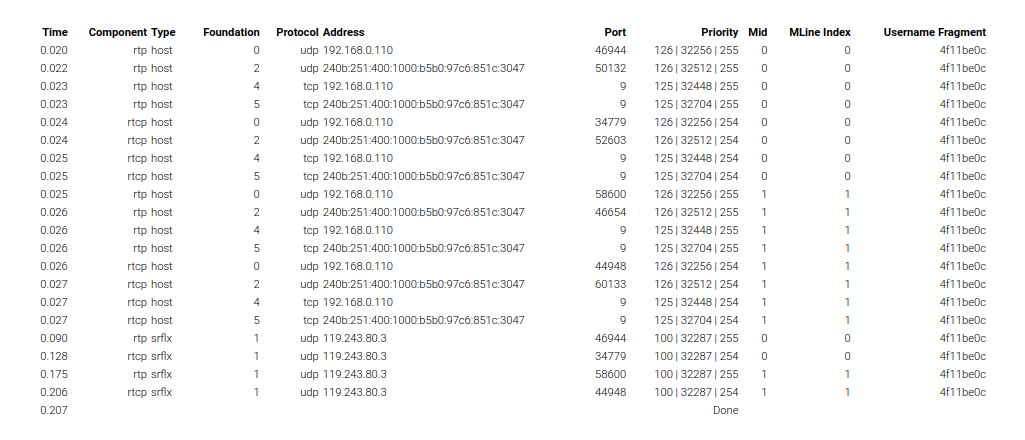

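This one needs careful reading and experimenting. Without granting mic/camera permission the output appears to be meaningless. The default stun:stun.l.google.com:19302 may be working: the global IP address currently in use is displayed.

Example output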

-- js/main.js

// default server (select option)

o.value = '{"urls":["stun:stun.l.google.com:19302"]}';

o.text = 'stun:stun.l.google.com:19302';

// save to the cache (localStorage)

const allServers = JSON.stringify(Object.values(serversSelect.options).map(o => JSON.parse(o.value)));

window.localStorage.setItem(allServersKey, allServers);

//

// Create a PeerConnection with no streams, but force a m=audio line.

getUserMedia() // addTrack is not necessary

const config = {

iceServers: iceServers, // STUN, TURN or '' (local)

iceTransportPolicy: iceTransports, // all, (public), relay

iceCandidatePoolSize: iceCandidatePoolInput.value // optional

};

// peerIdentity // default null

const offerOptions = {offerToReceiveAudio: 1}; // deprecated?

pc = new RTCPeerConnection(config);

pc.onicecandidate = iceCallback;

// iceCallback: candidates.push(event.candidate.candidate)

pc.onicegatheringstatechange = gatheringStateChange;

// if (pc.iceGatheringState === 'complete') getFinalResult()

pc.onicecandidateerror = iceCandidateError;

const offer = await pc.createOffer(offerOptions);

await pc.setLocalDescription(offer);

// the essential part is

pc1.createOffer(offerOptions)
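The strings collected in the candidate callback can be split into fields to see where the global IP address comes from (a `srflx` candidate obtained via STUN). A minimal sketch based on the standard candidate attribute layout; `parseCandidate` here is written for these notes, and the address in the usage example is a documentation address, not real output.

```javascript
// Parse the `candidate` attribute string of an ICE candidate.
// Layout: candidate:<foundation> <component> <protocol> <priority>
//         <address> <port> typ <type> [raddr <addr> rport <port>] ...
function parseCandidate(text) {
  const prefix = 'candidate:';
  const start = text.indexOf(prefix);
  if (start === -1) return null;
  const fields = text.slice(start + prefix.length).trim().split(/\s+/);
  if (fields.length < 8 || fields[6] !== 'typ') return null;
  const componentNames = {1: 'rtp', 2: 'rtcp'};
  return {
    foundation: fields[0],
    component: componentNames[fields[1]] || fields[1],
    protocol: fields[2].toLowerCase(),
    priority: Number(fields[3]),
    address: fields[4],
    port: Number(fields[5]),
    type: fields[7], // host, srflx (via STUN), prflx or relay (via TURN)
  };
}
```

For example, `parseCandidate('candidate:842163049 1 udp 1677729535 203.0.113.5 46154 typ srflx raddr 0.0.0.0 rport 0')` yields an object whose `type` is `'srflx'` and whose `address` is the public address seen by the STUN server.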

Simply uses AudioContext.

-- js/webaudioextended.js

(constructor)

window.AudioContext = window.AudioContext || window.webkitAudioContext;

this.context = new AudioContext();

(start)

BiquadFilter highpass

(applyFilter)

mic = this.context.createMediaStreamSource(stream)

peer = this.context.createMediaStreamDestination();

// stream -- mic -- filter -- destination

(renderlocally)

// mic -- (filter) -- this.context.destination
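The node graph "stream -- mic -- filter -- destination" can be sketched as one function. This is not the code from webaudioextended.js: `buildFilteredStream` is a hypothetical name and the default cutoff frequency is an arbitrary value chosen for illustration. The AudioContext methods used are the standard ones.

```javascript
// Wire microphone -> highpass filter -> peer destination.
// `context` is an AudioContext; `stream` is the MediaStream from getUserMedia().
// Returns the filtered MediaStream whose tracks can be given to addTrack().
function buildFilteredStream(context, stream, cutoffHz = 1500) {
  const mic = context.createMediaStreamSource(stream);
  const filter = context.createBiquadFilter();
  filter.type = 'highpass';
  filter.frequency.value = cutoffHz; // arbitrary default, an assumption
  const peer = context.createMediaStreamDestination();
  mic.connect(filter);
  filter.connect(peer);
  return peer.stream;
}
```

Rendering locally would additionally connect the filter (or the mic) to `context.destination`.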

-- js/main.js async

const webAudio = new WebAudioExtended(); // in webaudioextended.js

webAudio.loadSound('audio/Shamisen-C4.wav'); // this does not seem to play?

(start)

webAudio.start();

(handleSuccess) // ordinary peer connection setup

toggleRenderLocally()

-- webAudio.renderLocally(renderLocallyCheckbox.checked);

handleKeyDown() -- webAudio.addEffect();

Displays the audio spectrum on the receiving side.

-- index.html

localVideo playsinline autoplay muted

remoteVideo playsinline autoplay muted

canvas

-- ../../../js/third_party/streamvisualizer.js

-- js/main.js async

pc2.ontrack = gotRemoteStream;

gotRemoteStream(e) {

remoteVideo.srcObject = e.streams[0]; // for some reason it does not play

const streamVisualizer = new StreamVisualizer(e.streams[0], canvas);

streamVisualizer.start();

}

Reads the current time repeatedly, wherever it is needed, and uses it.

const elapsedTime = window.performance.now() - startTime;

Codec preferences: works in Chrome, does not work in Firefox. Scalability Mode: works in neither. Not implemented yet?